We are excited to co-post an essay written by Patrick Anderson of the Substack Ever Not Quite. Patrick is a faithful reader of the Savage Collective, and we have also been reading and enjoying his essays. We have lots in common. He writes primarily about technology and humanism. This essay applies his thinking to the impact of AI on work and labor, so we felt this was a good match. The piece focuses more on office work, but it is important to note that AI will have a significant impact on traditionally working-class labor as well. In fact, I just read a piece in the Pittsburgh Post-Gazette about Hellbender, a Pittsburgh-based electronics manufacturer, which is being touted as the “first artificial intelligence manufacturer,” whatever that means. Please follow and read Patrick’s work.

A few weeks ago, I happened upon an article appearing in the January-February 2025 issue of Harvard Business Review titled “Why People Resist Embracing AI” by Julian De Freitas. I would really encourage you to read it, especially if you don’t belong to the class of management insiders to which the article is addressed, and may not have first-hand insight into how AI is being thought about by those in charge of deploying it on a corporate scale. The article argues that, as industry necessarily shifts towards ever-greater integration of artificial intelligence into its many processes, it faces one particular obstacle of a non-technical nature: existing employees’ resistance to using AI to do their jobs. This is consistent with many surveys which reveal widespread worry among the public that AI will eventually take their jobs, compromise their personal data, or even attack humanity itself. This produces what De Freitas calls a “striking paradox”: while corporate strategists overwhelmingly report that they believe AI will become a major part of their operation over the next two years, they haven’t yet had much success incorporating it into their daily functions. “With this much cynicism about AI”, De Freitas laments, “getting workers to willingly, eagerly, and thoroughly experiment with it is a daunting task.”

De Freitas goes on to identify what he calls five “psychological barriers” which, according to over a decade of original research, are to blame for workers’ less-than-enthusiastic embrace of the technology, and he offers several suggestions for how managers might “overcome” them. These include what he calls “fundamental human perceptions that AI is too opaque, emotionless, rigid, and independent, and that interacting with humans is far more preferable”. De Freitas is clear that the purpose of his article, and the research underlying it, is to aid in “designing interventions that will increase AI adoption inside organizations and among consumers generally.”

Never once does the article use the word “inevitable”, but there can be little doubt that all it has to say about the future of work and leisure, production and consumption can be justified only against a hypothetical background of technological inevitability: AI will, and must, insert itself into every interstice of how business will be conducted and wealth will be created in the future—and management and workers alike must begin making the necessary accommodations, or risk finding themselves in that most fearful condition of having been left behind. “Unfortunately”, De Freitas mourns, “most people are pessimistic about how [AI] will shape the future.” Why exactly this should be unfortunate he doesn’t say, but we are safe to assume that it’s because the failure to welcome artificial intelligence into the workplace will mean that we won’t benefit from the prosperity which lies just on the far side of the requisite adjustments to the means by which we make the earth into a home fit for human life.

Now, a single article making this argument might perhaps be evidence of a peculiar, if not entirely unique, attitude on the part of its author towards work and the people who perform it. But if it can be said that the views it expresses represent those of corporate leadership more broadly—and I think it’s fair to make such a generalization about words which appear in the pages of the Harvard Business Review—then it’s worth paying attention to how business managers are charting the path forward with AI, and what sacrifices they think will be required to get there. If AI promises to advance the prime directive of all publicly-traded businesses—namely, to increase productivity by means of greater efficiency and to maximize the value of corporate assets—then workers’ resistance may be seen as little more than an obstacle to be overcome by whatever tactics are deemed necessary. Indeed, to the extent that people are cynical about AI and how they imagine it will affect their future, it seems that there is at least an equal measure of cynicism within the upper echelons of corporate hierarchies towards workers’ intransigence when they are asked to welcome it into their work lives.

The five barriers which De Freitas identifies are as follows, two of which I’ll single out for a bit more scrutiny:

People Believe AI Is Too Opaque

People Believe AI Is Emotionless

People Believe AI Is Too Inflexible

People Believe AI Is Too Autonomous

People Would Rather Have Human Interaction

But first, notice how each of these barriers is framed: “People…”. The problems which need to be solved, in other words, are located entirely in workers themselves and in their beliefs; never is there anything which might be worthy of consideration about the technology which they mistrust, and resistance is framed as mere bias, something which must, of course, always be corrected. (Notice, by the way, that even the title of the article—“Why People Resist Embracing AI”—suggests, and not very subtly, that embracing AI would represent some sort of default position which, for whatever perverse reason, some people “resist”.)

Setting these observations aside, let’s consider just two of these five barriers and De Freitas’ proposed solutions to them:

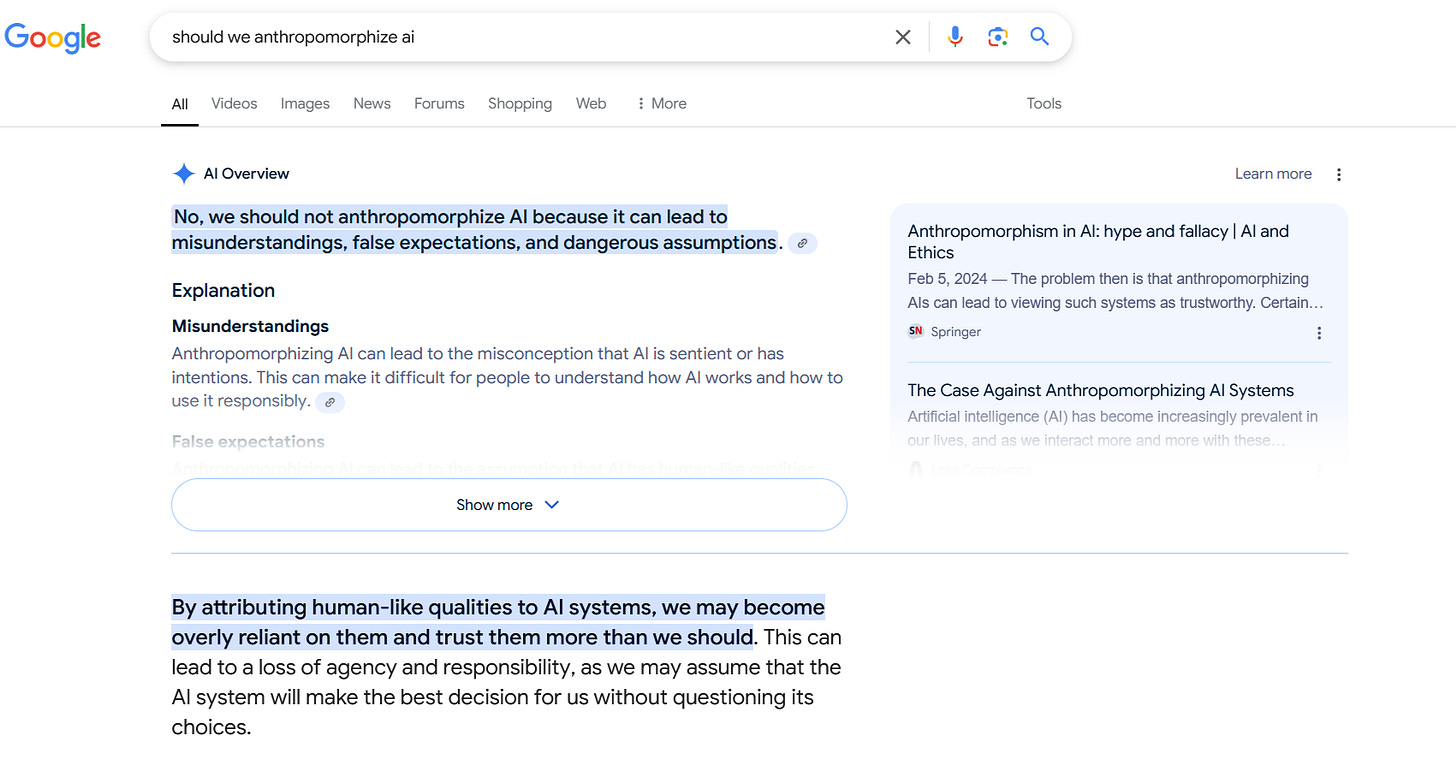

One of the barriers, as De Freitas puts it, is that “individuals who have a lower tendency to anthropomorphize AI also have less trust in AI’s abilities, leading them to resist using it.” People tend to trust in the competency of those they consider to be like themselves (in this case, human) and with whom they can have an authentically mutual relationship, and to mistrust the competencies of whatever appears simply to be masquerading as human. Naturally, the solution to this is for organizations to “promote the adoption of AI tools by anthropomorphizing them—for instance, by giving them a gender and a human name and voice.” That the bot which they are asked to work with is not, in fact, a human being is thus a reality which must be concealed, or, short of this, its salience must be minimized.

The social psychologist Sherry Turkle, who has been called the conscience of the tech world, has argued over many years that it is important never to lose sight of the counterfeit reciprocity of all our conversations with machines.1 But as is often the case, it seems that the advice of philosophers and ethicists—whose role is to consider carefully what is true, however slick the appearances, and how we should act accordingly—here finds itself at odds with the incentive to construe reality in whatever way is most expedient for the needs of business.

Another barrier impeding the adoption of AI is that employees feel disempowered by the resulting loss of control over the projects which they oversee. They are “reluctant to adopt innovations”, De Freitas says, “that seem to reduce their control over a situation.” But consolation can supposedly be found in the fact that the perception of control may be maintained with the aid of nominal gestures which flatter employees’ sense of autonomy: “Fortunately, studies find that consumers need to retain only a small amount of input to feel comfortable”, he writes. “Marketers can thus calibrate AI systems so that there is an optimal balance between perceived human control and the systems’ accuracy.” Perceived control, in other words, is what matters most when the real thing can be expected to undercut the quality of performance. This is like a parent allowing a toddler to choose their own outfit from a few preselected options in order to inflate their sense of agency without significantly affecting the anticipated outcome. This sort of enlarged sense of control may be beneficial to some children, but its superficiality can only be demeaning for adults; it is a tacit admission that the algorithm’s “judgment”—a word which can only be applied in the most metaphorical sense—is what has the trust of industry managers, and not the workers who use it.

Now, agency and responsibility are the human characteristics which make it possible in principle to adjudicate praise and blame, innocence and culpability. Only so long as the human being is conceived as the atom of the moral universe, the indivisible and irreducible unit of moral choice, is it possible to make meaningful judgments about actions which have been taken (or not taken), and to bring something like moral clarity to any situation. It has long been observed that the diffusion and obfuscation of agency in bureaucracies and other large decision-structures render this adjudication impossible, injecting a stream of moral squid-ink which conceals responsibility for bad outcomes.2 The bureaucracy is the form of corporate organization which discharges its business objectively, “according to calculable rules” and “without regard for persons” in Max Weber’s well-known formulation.3 The consequences of an employee’s false sense of agency extend well beyond the misconstrual of reality which is required to maintain the illusion; they also proceed outwards, further contaminating any attempt to pass moral judgment.

These solutions to the alleged problem of “AI-resistance” demonstrate a contempt for employees which sees the barriers currently preventing a warmer embrace of AI as mere problems to be solved, and treats basic features of their humanity as intolerable obstacles wherever these are inconsistent with enticing shortcuts. Why, then, would human workers be kept on board at all?

Well, perhaps they won’t.

You may have seen the provocative ads for Artisan, the AI startup leasing digital employees it calls “Artisans”, which began appearing around San Francisco bus stops last December.4 The ads encouraged employers to “stop hiring humans”, and declared that artificial “employees” “won’t complain about work-life balance”. The ads were surely designed to attract attention through antagonism—and if we’re talking about them, they’ve succeeded.5 But whether they accomplished their goal or not, this was a case of saying the quiet part out loud; even if the ads accurately reflected the inclinations of many industry managers, you still aren’t expected to be so blunt in public.

I read the Harvard Business Review article as a similar case of a public admission—one which was more articulate, just as it was less visible—of industry’s implicit incentives: if you can’t (yet) actually replace your employees, the next best thing will be to cajole them into automating as much of themselves as possible by taming their misgivings with cynical and dishonest manipulations.

Here’s a paradox which cuts somewhat deeper than the one which the Harvard Business Review article points out: the most ardent champions of AI’s incipient foray into the workplace say that its wholesale adoption promises to call forth a world of prosperity and abundance, yet it will be able to provide this only to the extent that it dismantles the existing one.6 The push for more AI in the workplace is just the latest costume which has come to adorn the familiar logic of creative destruction: the sooner we are willing to take a hammer to the ways we are currently doing things—so the argument usually runs—the sooner a supposedly better arrangement will fall into place.

There is some truth here. Confrontations like the present standoff between management and their AI-skeptical employees disclose a reality which has long characterized modern industrial and post-industrial labor: while modern economies seek methods by which to efficiently produce and distribute goods and services for human consumption, they aren’t, at least not primarily, systems designed for human beings to work inside; the need of modern corporations and bureaucracies to employ human beings was always, in an important sense, an accidental feature, a byproduct of the simple fact that we alone possess the competencies required to keep the system running—and perhaps we couldn’t ask for better evidence of this than the fact that employees who do this kind of work are being so aggressively pushed out of the office at the first sign of technological feasibility.

The resistance to all this among many employees summons comparisons with the Luddite movement which originated in early nineteenth-century England in the initial phases of industrialization.7 What makes the present episode different, however, is that, whereas the Luddites were defending their trades and their accompanying forms of life against the changes to the shape of their work introduced by machine production, the mode of labor which stands to be defended this time around is one which has already been affected—and, in many ways, belittled—by earlier industrial innovations. If there is consolation to be found in the entry of artificial intelligence into the post-industrial workplace, then, it is that it seems to provide an unexpected offramp from all this: an opportunity to abolish some or all of the most menial office work which many people are currently stuck doing, turning it over instead to the quintessential post-industrial technology, and allowing them to do something more befitting of their humanity. AI is thus offered as the improbable remedy for afflictions caused by the very economy which has created it in the first place.

As I have written before, following an essay by L. M. Sacasas, the image which comes to mind here is that of the ouroboros, a snake circularly devouring its own tail: the mouth of advanced techno-industrialism consumes itself, including the pathological forms of labor in which many have become entangled, leaving all of us liberated, leisured, longer-lived, and newly oriented towards the free exercise of creativity.8 This is one way of describing the promise which is identified in the title of Aaron Bastani’s 2018 book, Fully Automated Luxury Communism, which describes a new economics of “extreme supply” which will become possible once machines take over virtually all economic production—an eventuality which Bastani considers to be just around the corner.9 The labor movement should reorganize itself, as Bastani puts it, into “a workers’ party against work”, one which aims for the abolition of all labor rather than simply the amelioration of its conditions and wages.10 In other words, it shouldn’t just be managers welcoming the new cohort of AI Artisans, but workers themselves.

The problem with this story is that the managerial jobs which stand to be replaced and the technology being offered to accomplish this are not actually as opposed to each other as this framing would suggest: each is an expression of the same logic which created the other. Properly understood, both embody the same dubious assumptions about work and its place within a meaningful life—and until we can account for this, we will continue to reproduce the kinds of systems which these assumptions encourage. The serpent may consume itself, but both ends belong to one and the same organism.

Here’s an alternative explanation for the widespread suspicion of AI which De Freitas doesn’t consider: I suspect that people “resist” adopting AI in the workplace at least in part out of an insight that runs deeper than a fear of losing their paycheck. Perhaps they also perceive that they are being driven into an unwelcome obsolescence by the imperatives of a system which, despite its claims to have their best interests at heart, obviously doesn’t—and its insistence on suppressing their capacities for thinking and judging, agency and responsibility is perhaps the surest sign that AI has been designed to consume human affairs along with those of the machine.

I suspect that the problem with modern labor has less to do with the fact of its existence, and even with its continued necessity, and rather more to do with the ways in which it has been trivialized and diminished, and has lost its place within the larger weave of a life lived with purpose, embedded within the reciprocities of a community and employing the faculties which labor both requires and instills.

As Anthony Scholle concluded in a recent essay for The Savage Collective, if the technologists are to be believed, the post-work future is really more of a post-job future: “[W]ork will remain central to human flourishing”, he writes, “and to our integration as embodied souls, as whole persons, oriented toward the Good. Jobs may fade, but there will always be work as we act to love our neighbors.” It was not the intention of the technologists who have developed artificial intelligence, nor of the managers who are trying to implement it, to reveal the value of labor. But this, in effect, is what they have done. Perhaps we should be grateful to them—not so much because they offer anything of real value, but because they are helping us to uncover opportunities to reconsider the premises which have limited our appreciation of labor, preparing us, perhaps, to walk away from them.

For a detailed explanation of how this came to be so, see the recent book The Unaccountability Machine by Dan Davies. Here is how Davies describes the book’s purpose:

This is a book about the industrialisation of decision-making—the methods by which, over the last century, the developed world has arranged its society and economy so that important institutions are run by processes and systems, operating on standardised sets of information, rather than by individual human beings reacting to individual circumstances. This has led to a fundamental change in the relationship between decision makers and those affected by their decisions, the vast population of what might be called ‘the decided-upon’. That relationship used to be what we called ‘accountability’, and this book is about the ways in which accountability has atrophied. (Dan Davies, The Unaccountability Machine, pg. 3)

A little later in the book, he introduces the concept of the “accountability sink”, which involves:

the delegation of the decision to a rule book, removing the human from the process and thereby severing the connection that’s needed in order for the concept of accountability to make sense. (Dan Davies, The Unaccountability Machine, pg. 17)

Max Weber, Economy and Society, pg. 975.

Artisan’s Y Combinator page concludes with the assertion that “This is the next Industrial Revolution.”

You can read the company’s own account of how these ads came to be, which concludes with a good deal of walk-back, here.

I feel compelled to mention that I am aware that this is not a paradox in the logical sense, but really more of a conundrum.

The best and most thorough accounting of the connections between these two eras is Brian Merchant’s 2023 book, Blood in the Machine: The Origins of the Rebellion Against Big Tech. Merchant writes in the book’s introduction:

Now, two hundred years later in the United States, we’re edging up to the brink again.

Working people are staring down entrepreneurs, tech monopolies, and venture capital firms that are hunting for new forms of labor-saving tech—be it AI, robotics, or software automation—to replace them. They are again faced with the prospect of losing their jobs to the machine.

Today, the entrepreneur who takes a risk to disrupt an industry is widely celebrated; venture capital firms look for unicorn ideas that shrink a market while consolidating their control. And automation is often presented as an unfortunate but unavoidable outcome of “progress,” a reality that workers in any technologically advancing society must simply learn to adapt to.

But in the 1800s, automation was not seen as inevitable, or even morally ambiguous. Working people felt it was wrong to use machines to “take another man’s bread,” and so thousands rose up in a forceful, decentralized resistance to smash them. The public cheered these rebels, and for a time they were bigger than Robin Hood, and more powerful.

They were known as the Luddites. And they launched what was “perhaps the purest of English working-class movements, truly popular of the people,” as one historian put it. Their targets were the entrepreneurs, factory owners, and other facilitators and profiteers of automation.

Even though that uprising took place two centuries ago, it contained the seeds of a conflict that continues to shape our relationship to work and technology today. In many ways, our future still depends on the outcome of that conflict.

At the time, I wrote that:

we can regard the changes that artificial intelligence will bring as liberatory and ennobling only to the extent that they ultimately undermine the very ways of thinking that are presently bringing AI itself into the world: in short, it must consume the various systems that require us to view ourselves as machines, but spare every jot of the humanity that was ours to begin with, and which this transformation has promised to restore. But if there’s any reason to suspect that the future will bear the traces of the manipulative tactics being employed to get us there—and such evidence is abundant—then there is little reason to cooperate in bringing it about.

Fully automated luxury communism, Bastani insists,

is a map by which we escape the labyrinth of scarcity and a society built on jobs; the platform from which we can begin to answer the most difficult question of all, of what it means, as Keynes once put it, to live ‘wisely and agreeably and well’. (Aaron Bastani, Fully Automated Luxury Communism, pg. 243.)

At one point, Bastani boldly suggests that:

Under FALC, we will see more of the world than ever before, eat varieties of food we have never heard of, and lead lives equivalent—if we so wish—to those of today’s billionaires. Luxury will pervade everything as society based on waged work becomes as much a relic of history as the feudal peasant and medieval knight. (Aaron Bastani, Fully Automated Luxury Communism, pg. 189)

Aaron Bastani, Fully Automated Luxury Communism, pg. 194 (italics mine).

Nice read. I'm reminded of an article from over a decade ago by an Israeli academic who was inquiring about the future of the "useless class," or people who had no role in productive labor. He argued that the average person in the future must be handled by offering simple sustenance and creating satisfaction through drugs and video games (https://www.cnn.com/2014/09/17/opinion/opinion-tomorrow-transformed-harari/index.html). I think that gets to the core of your argument here: the managerial class looks at human satisfaction as an obstacle to be solved (through technical means specifically) instead of the actual goal of working to improve the world. Which does leave a question: what *is* the goal of making industry more productive and efficient, if not human happiness?